Introduction

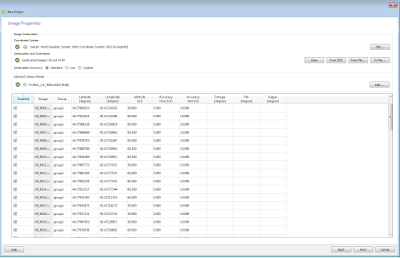

This week our objective was to navigate through dense woods to a series of predetermined points, marked with flags, using the maps we made in the navigational map design lab. To navigate, we had a set of five UTM coordinates and a GPS unit set to track our route and to record a point at each flag.

Study Area

The study area was the Priory, a UWEC residence hall located off the main campus and set on 120 acres of forest, surprisingly close to campus. The wooded area has trails, benches, and a variety of flora and fauna throughout. In addition to the plants and animals, the Priory has a variety of terrain features, including rivers, hills, V-shaped valleys, and steep inclines, as well as quite a few sharp plants.

Figure 1. The Priory and the Priory Woods.

Figure 2. Dr. Hupy explaining the GPS unit.

The class met at the Priory parking lot; the sun was shining and there was a chance of rain, but we were excited nonetheless. After receiving our printed maps, we tried to determine where the points were using the UTM grid on our maps, to make navigation easier. We ran into a problem right away: one of our maps did not print correctly, and, inconveniently, it was our UTM grid map. Thinking quickly, we pulled up that map on our phones and cross-referenced the points with the map we did have.

After plotting the five pairs of UTM coordinates and receiving a tutorial on how to mark each point with the GPS once we reached it (Fig 2), we set off into the woods!

We circled the non-forested area until we found a trail that would, presumably, take us closer to the points; having never been to the Priory before, we were all a little lost. We made it into the interior of the woods rather quickly by following a trail marked with pink flamingos (we have no clue why they were out there). Where the trail ended, the woods opened up and we started looking for our first point (Fig 3, 4).

Figure 3. The first clearing of the woods.

Figure 4. Looking for our first point.

We stopped to figure out the GPS and take another look at the coordinates (Fig 5). It took us a little while to work out which direction of travel made each set of numbers increase or decrease, so that we could tell whether we were heading the right way.

Figure 5. The GPS and the coordinates.
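Working out which way the numbers move is the heart of navigating by UTM coordinates: within one zone, easting increases toward the east and northing increases toward the north. The short Python sketch below illustrates that reasoning by turning the difference between a current position and a flag's coordinates into a straight-line distance and a grid bearing. It is only an illustration under stated assumptions: both points are in the same UTM zone, the function name `grid_bearing_and_distance` and the coordinates are hypothetical (not our actual course points), and the bearing is measured from grid north, which differs slightly from true and magnetic north.

```python
import math


def grid_bearing_and_distance(start, target):
    """Return (grid bearing in degrees, distance in meters) from start to target.

    Hypothetical helper for illustration. Both points are (easting, northing)
    pairs in meters and are assumed to lie in the same UTM zone.
    """
    d_east = target[0] - start[0]    # positive means the target is to the east
    d_north = target[1] - start[1]   # positive means the target is to the north
    # atan2(east, north) measures clockwise from grid north, like a compass bearing
    bearing = math.degrees(math.atan2(d_east, d_north)) % 360
    distance = math.hypot(d_east, d_north)
    return bearing, distance


# Hypothetical coordinates, not the real flag locations
here = (620000.0, 4962000.0)
flag = (620300.0, 4962400.0)
bearing, distance = grid_bearing_and_distance(here, flag)
print(f"Walk about {distance:.0f} m on a grid bearing of {bearing:.0f} degrees")
```

In this made-up example the flag is 300 m east and 400 m north of the current position, so both numbers on the GPS should grow as you walk toward it; if either one starts shrinking, you are moving away from the flag along that axis.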

After losing the trail and beginning to make one of our own, we figured out how to get to our first point (Fig 6). The vegetation around this point was quite thick: we had to push through many small, narrow young trees, as well as bushes and branches with very sharp thorns.

Figure 6. The first point, marked with a flag.

The second point was not close by at all, and after backtracking and stumbling around for a while, we began to head in the correct direction, which was down into a valley (Fig 7).

Figure 7. The beginning of the trip into the valley.

Once we were in the valley, the going got a little easier: the brush cleared out, and only tall, mostly dead trees cluttered the area. Many of the dead trees had fallen across our path, so we had to climb over (and under) them to reach the second point (Fig 8).

Figure 8. Point 2, under a fallen log off the main branch of the valley.

For our third point, we had to continue along the floor of the valley toward the interstate to locate the flag. We got close a few times before realizing that to reach the point we would have to change our elevation (Fig 9). This seemed like an easy task; however, the hillside we had to climb was steep and unstable, and every step forced us to catch our balance. Many dead trees also stood on the hill, so reaching for them for support was a bad idea; a few were so poorly rooted that they fell right over as we passed. And, of course, there were more thorny plants. After a while, we reached the top of the hill (Fig 10).

Figure 9. The uphill climb, about halfway up the hillside. The photo does not do the hill's incline justice.

Figure 10. Point 3. Dense vegetation at the top of the hill, with a lot of thorny plants.

Now we had two points left. We were getting a little tired and hot from all that climbing, but we pressed on. After climbing out of the other side of the valley, we unknowingly backtracked to point 1, and from there we headed toward point 4. The backtracking covered quite a bit more distance than a direct route to the 4th point would have, and it was largely inefficient: we climbed out of one valley only to descend into another. At least this time we had a stream (Fig 11).

Figure 11. Point 4, with a stream.

After point 4, things broke down a little. We were tired and hot and wanted to take a break, but we knew we only had to find one last point before we could go home. So we set off, but we ended up going the wrong way for a while (about 35 to 45 minutes!), which did not help morale. But we did get to see a lot of the Priory grounds, so we had that going for us.

For the 5th point, we actually had to get back into the valley we had climbed out of, walk about 100 meters back past point 4 toward the highway, climb another steep slope, and then wander on a hilltop for a little bit.

As we grew more and more tired and hot, we all went direction-blind and became disoriented. None of us had known where to go to begin with, and now our sense of direction had eroded away as well. So after what seemed like an eternity atop that hill, we finally stumbled on the 5th point* (*not shown because we were hot, tired, and forgetful).

After a long trek out of the woods, we got to go home. As the track log in the map below shows, we went all over the place. Note that the track log was turned off for an unknown reason during the activity, so the map shows only some of our track log points.

During this activity we had a great group dynamic and worked well together. The points were frustrating to find on more than one occasion, but we never blamed each other; we just kept going. A positive attitude matters when navigating, because many issues come up, and to keep going you have to focus on the end goal. That said, the errors in this project were all human and procedural. The issues we faced could have been avoided, and will be the next time we navigate in the field: not having the correct map, making mistakes when reading the GPS, and accidentally turning off the track log. This run was a great lesson in what to do and what not to do. In the end we did find all 5 points, although it took us longer than any other group. But we did not give up, and we persevered in the end.